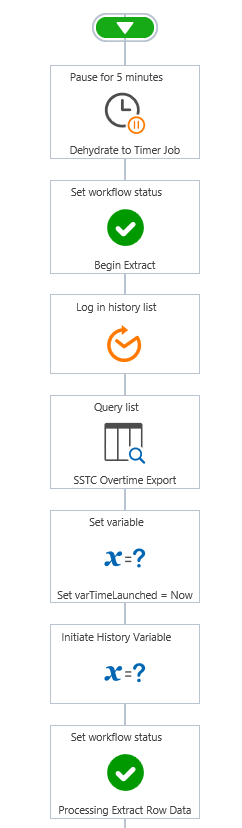

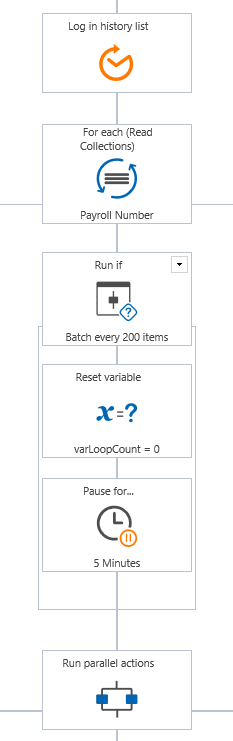

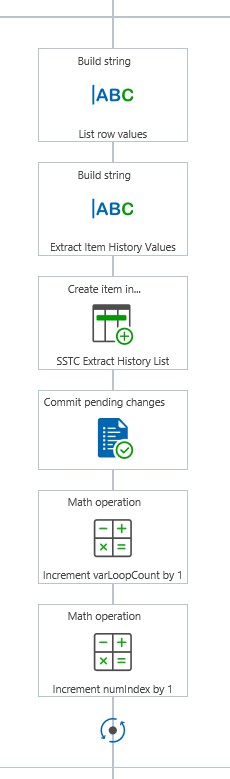

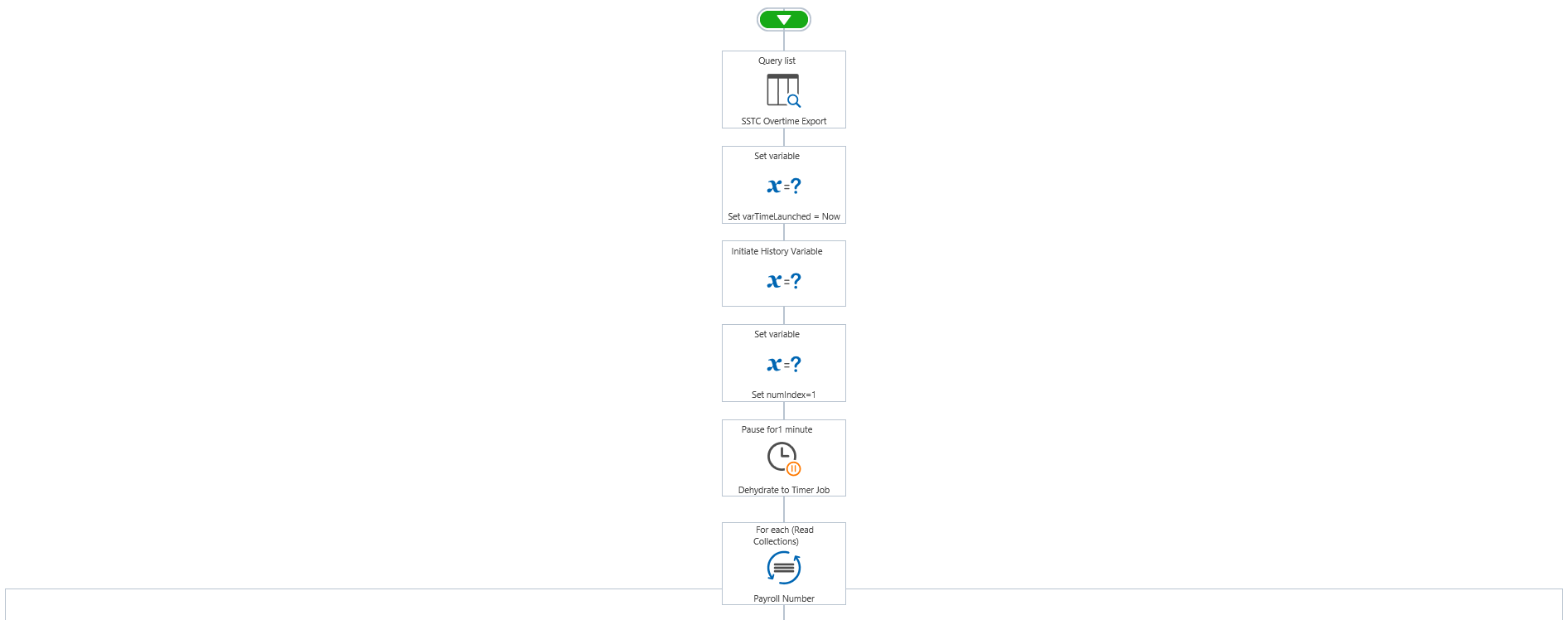

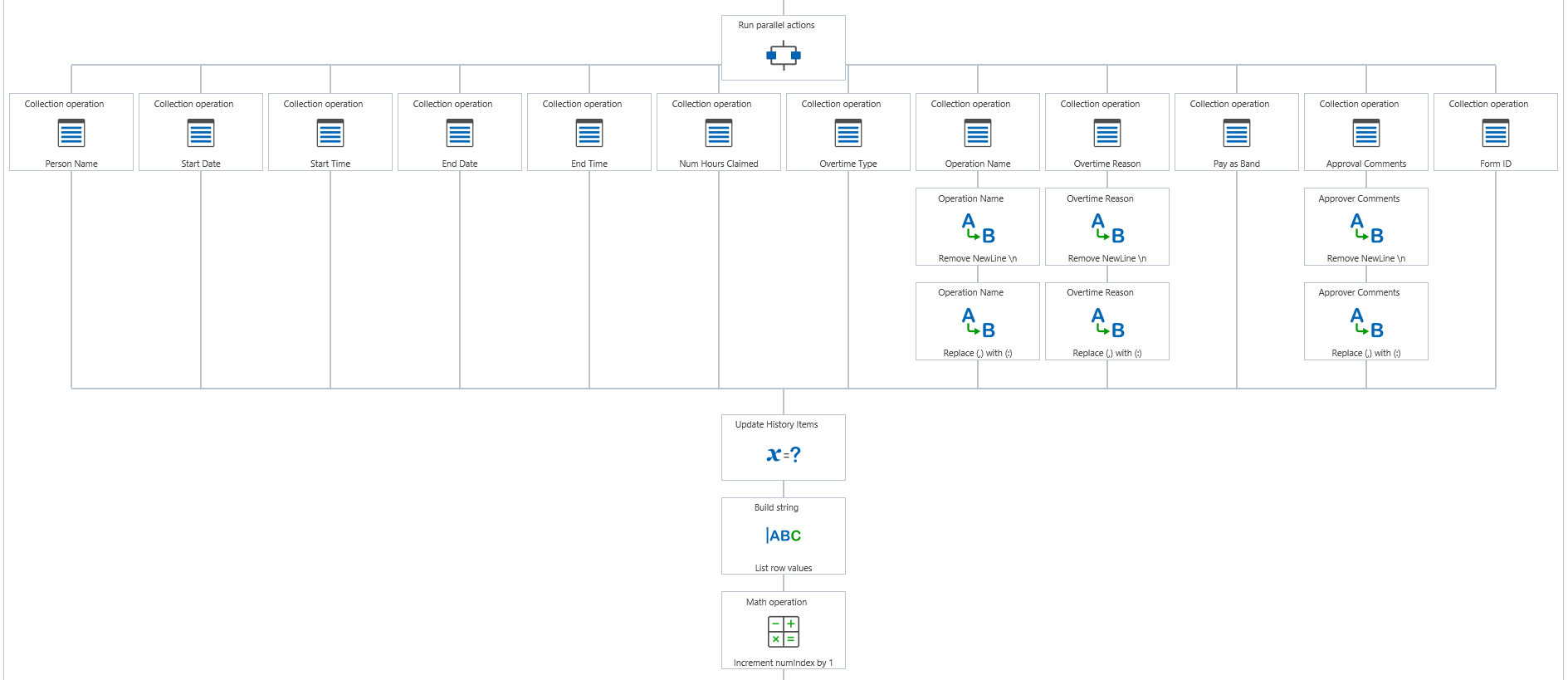

I have built a site workflow that is taking rows from a list (it's an overtime claim list) and building a CSV file for the payroll team to process onwards for reporting. I tackled this by querying the list and passing each of the fields needed into collection variables. Then it pauses for 1 minute to dehydrate the job to the SharePoint timer service. Next, it passes into a for-each loop, where each of the collections are cycled through in turn to produce the variables. This is done with parallel actions (12). Some of the parallel actions need some regex on the output variables to remove commas (we are saving to a CSV at the end). Within the loop, I am building a string value to collect all the row item data into a chunk.

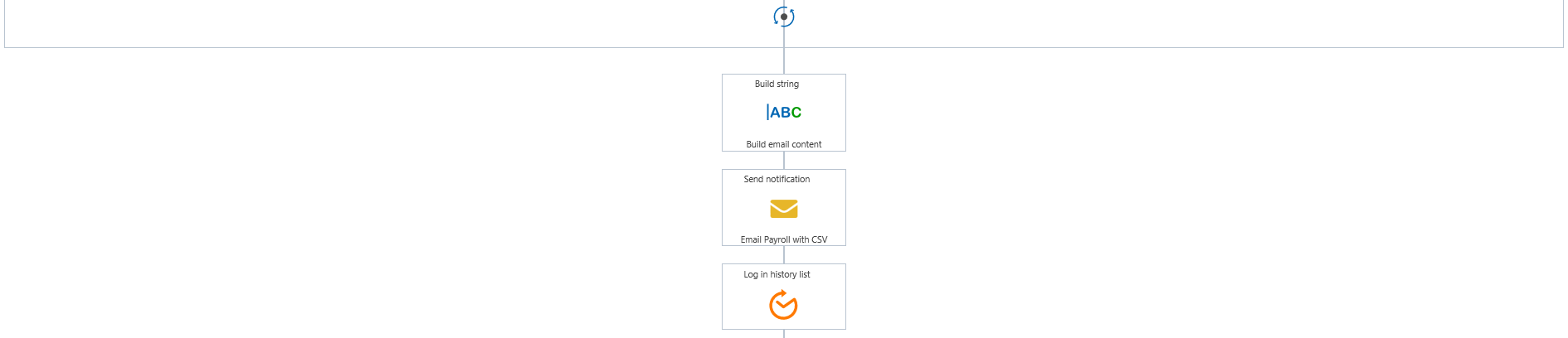

Once exited from the loop, another build string is used to produce headers and then adds the chunk with the appropriate commas to separate. Finally an email goes off to the payroll team with the CSV attached.

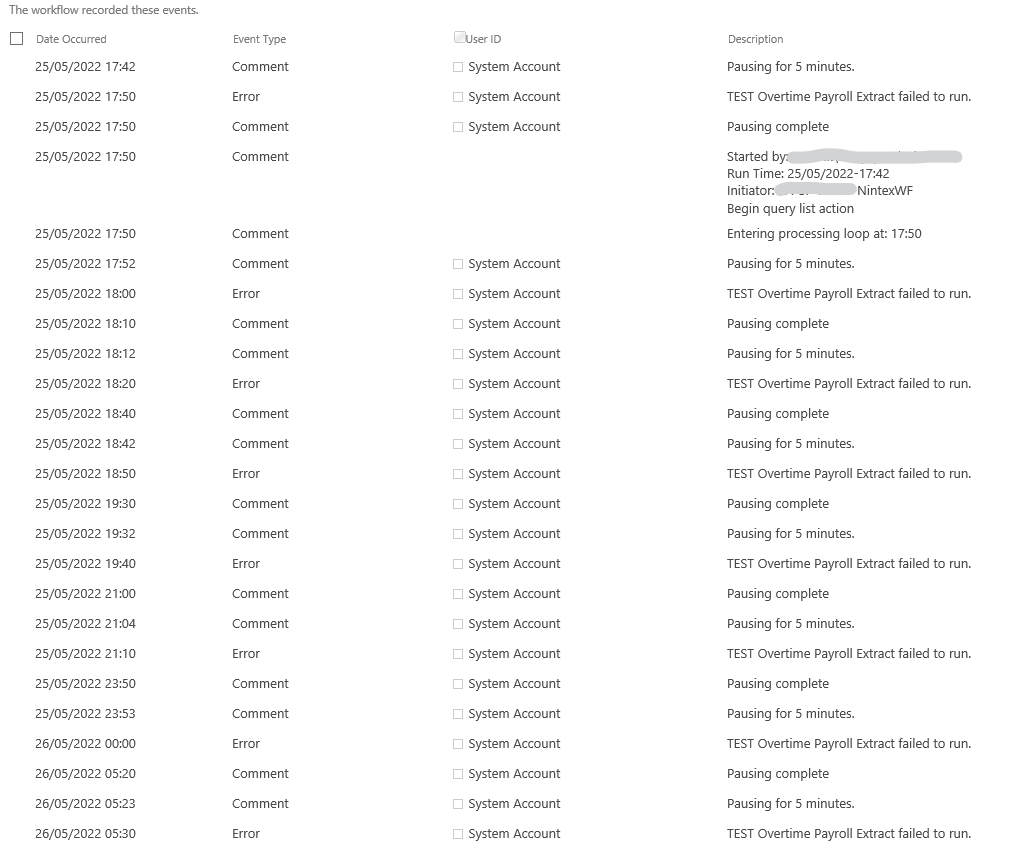

This worked for test, when I only had a few hundred items in the list, but now that has risen to nearly 2500 items, which is not large in SharePoint terms, but I am not sure whether the workflow is able to handle the volume of process actions. With this number of items, the workflow starts quite quickly, but then waits for a lot more than one minute (the pause or 1 minute). I can see a status shows as in progress.

After about 20 minutes, workflow history shows that there is an error. Scrolling to the bottom of the history (a long way down), the error simply states: Error System Account Overtime Payroll Extract failed to run. Am I going about this in the best way, as it seems that I am running into a timeout? When I look at the history, I can see it has recorded a large number of actions and then seems to stop, mid flow during one of the loops.

Is there a way to streamline this or improve the efficiency? If there is a timeout, can it be adjusted, and if so, to what value?

Hopefully someone can help me find a solution to this. Thanks in advance.