I built some workflows late last year, many of them of medium complexity, and I’m looking for guidance on how to speed some of them up. The underperforming workflows are mostly those that make numerous updates to SharePoint Online lists (status columns, etc.).

The column type does not seem to affect the time taken; a single update takes around 30 seconds to a minute. The connector uses a list/library connection with an identity that has full site collection permissions. One particular workflow, which basically sets a flag on each item in a list view (based on date constraints), takes more than two hours to run through its for-each loop. The list view in that instance held only about 210 items.

On the previous version of Nintex for SharePoint 2016 on-premises, the same workflow would finish in around 10 minutes.

Is there a more efficient way to update list columns in a loop, one that takes a fraction of the current time? My concern is that the list view could grow to between 2000 and 2500 items.
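To put a rough number on that concern, here is a back-of-the-envelope projection. It is only a sketch: it assumes the updates run one after another and that each one keeps taking the 30 to 60 seconds I am seeing now. The function name `projected_hours` is just something I made up for the illustration; it is not part of Nintex or SharePoint.

```python
def projected_hours(item_count: int, seconds_per_update: float) -> float:
    """Estimate total loop runtime in hours, assuming one sequential update per item."""
    return item_count * seconds_per_update / 3600


# Observed per-update time in my runs: roughly 30 to 60 seconds (assumed constant here).
for items in (210, 2000, 2500):
    low = projected_hours(items, 30)
    high = projected_hours(items, 60)
    print(f"{items} items: {low:.1f} to {high:.1f} hours")
```

For the 210-item run this lands in the same range as the two-plus hours I observed. At 2000 to 2500 items the same rate would mean somewhere between roughly 17 and 42 hours, which is why I need a different approach.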

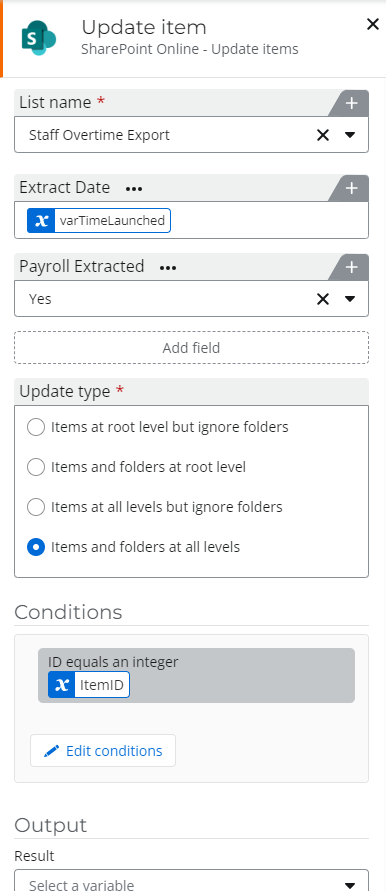

Here is the configuration of the action that updates a date column and a yes/no column on the SharePoint site. It runs on each iteration of the loop.