I have developed a workflow which is used to extract items from a list on a monthly basis for business finance. Below is a description of the approach I have taken.

Step 1: The workflow has to query the list to capture the required items. This is done with a Query List action, filtered by the date range (first to last days in a calendar month). This can result in anything from 800 to 3000 items in the target range.

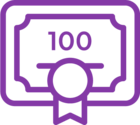

Step 2: We then need to loop through the Items collection and break it into individual collections, one per column needed. There are 14 columns that are of interest.

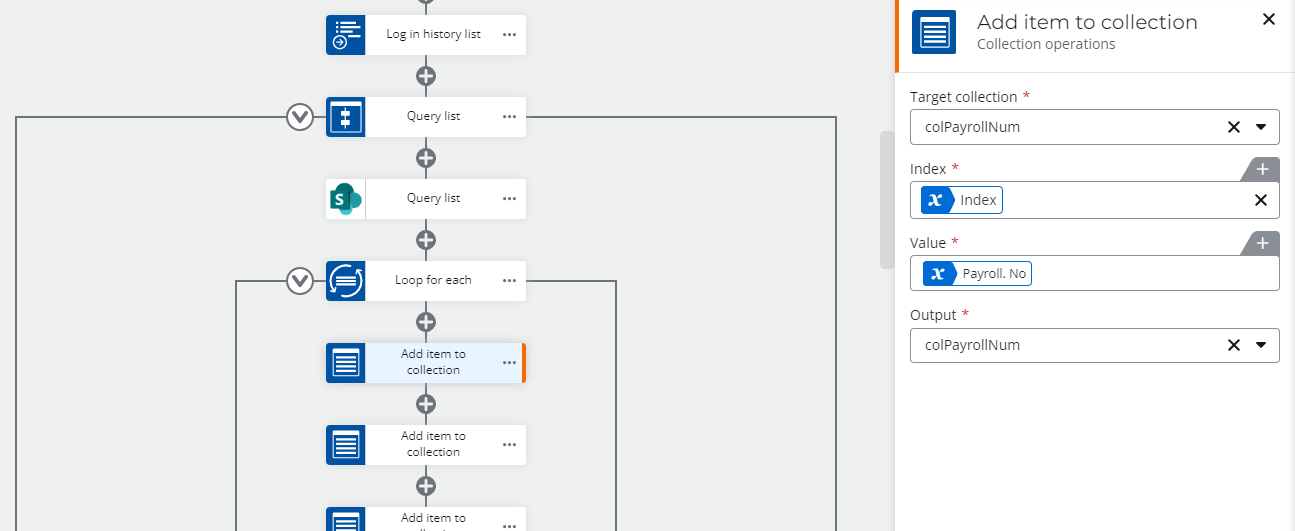

Step 3a: Next, another loop is needed to extract each collection item into a usable variable, which can then be used in the output stage. Within this loop is a Run-If action that batches in chunks of 200, using a row count variable

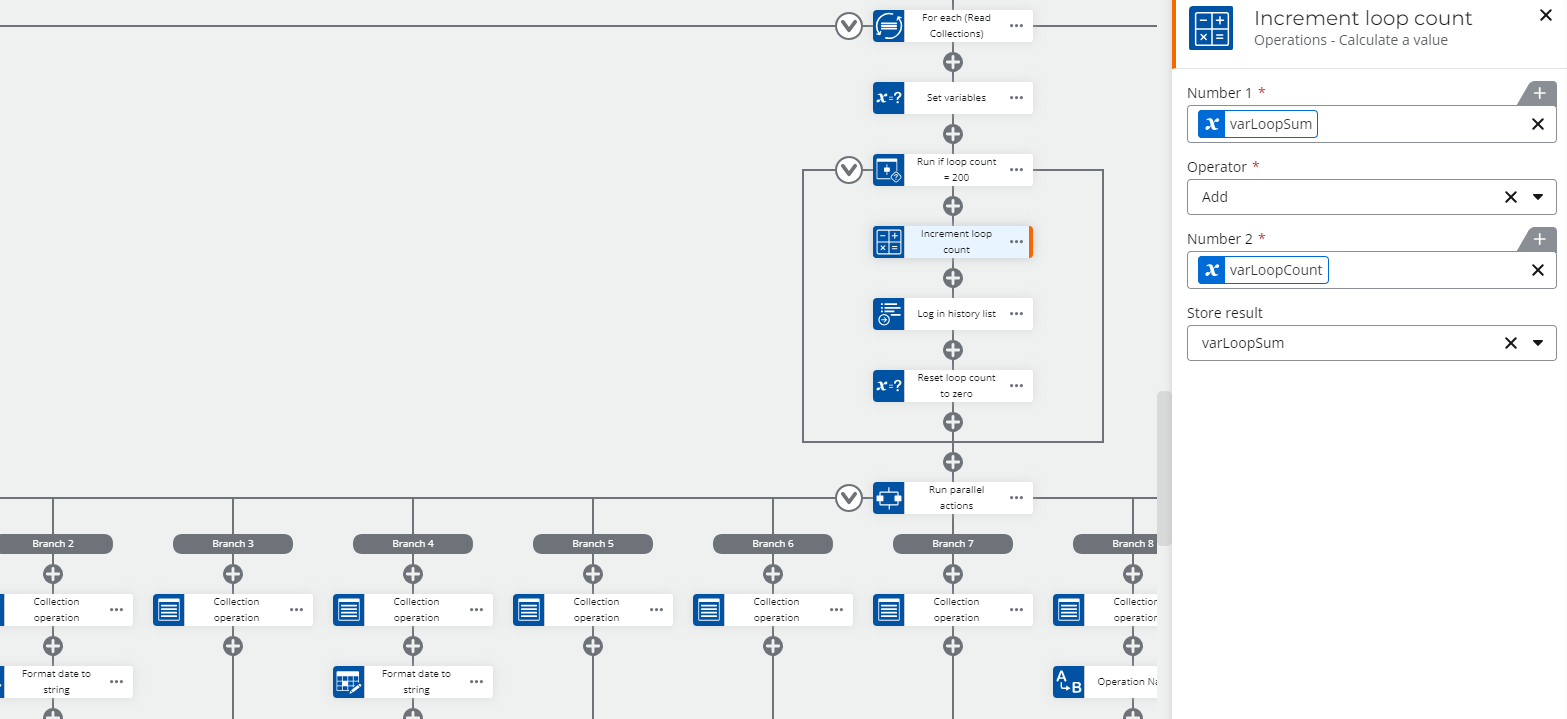

Step 3b: Now the collections are run though a parallel action and apply some date/time formatting as well as Regex to remove problematic special characters and line feeds. Then the column variables are used to build up the rows of column data into a variable varOutputData (CSV format), using Create a text string actions.

Step 3c: To prevent items from being targeted in subsequent runs, a flag is then set to true in the list, which is a Boolean column. Then to aid with management and list views, the current date is added to a date/time column in the list.

Step 3d: Next, some math actions are used to increment the loop and batch count variables by one, for the next iteration. The varOutputData variable is logged into the workflow history.

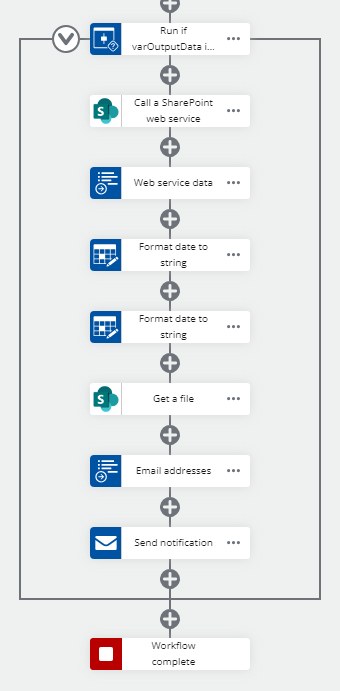

Step 4: Finally, we call a Webservice to create a CSV file in a named document library, format dates into strings and merge the varOutputData variable into the CSV, before attaching it to an email, sent to the finance team.

So, this is how I get the desired data from the SharePoint list, set each affected items as “extracted” together with run date, and then send the resulting CSV data in an email.

The Problem

However, there are actually two problems. One is the time taken to complete, the other is the unexpected workflow terminations.

- For a month containing a target group of 250 list items, the workflow will run for anything up to 8 hours to complete. It is related to the fact that 2 list columns are being written to (Boolean flag and the date). I guess this is just down to the latency incurred when two Cloud based entities are communicating, authenticating and then verifying each list update. I am still looking for a solution to this problem.

- I recently had a month that contained just shy of 1800 items in the target range. I ran the workflow in smaller date chunks to try and circumvent any runtime issues. The first run was against 150 items, and that ran through in about 2 hours. The next two runs were for 120 and 95 items. These also completed. On the fourth run, there were 254 items. That ran for about 5 hours and then terminated. There was nothing at all in the history, just terminated. Looking at the history, my log of the output data showed about 98 rows and “truncated” at its end - not the full history. I had to abandon the run and reset the list items that had half completed. I tried again with only 150 items in range and it worked. I had two instances of terminations in a total of 23 workflow run attempts before I got all the data I needed. In all, it took nearly 2 days, which is insane.

The Plea

What am I doing wrong? Is there a better and more resilient way of handling lists with 1000s of rows? I full accept that my workflow may not be designed in the most efficient way, but I cannot believe that it is normal for such a workflow to take so long to complete.

Has anyone here had more success with this sort of situation? If I just wanted to send a CSV file of all items within range, then that would happen in minutes, so I know the time-consuming element are the list item updates. The problem is that the extract and the update of these columns needs to happen concurrently.

Thanks in advance!